Billionaires in Jeffrey Epstein's crime syndicate and/or orbit want to put artificial intelligence into their killer robots armed with facial recognition in order to root out their perceived enemies (anyone trying to hold them accountable). In that context, we challenged the ChatGPT of Jeffrey Epstein's crime syndicate and/or orbit to differentiate between a pea (an enemy) and a soup (the population). It failed to identify the pea as separate from the soup: at first it acted as a diplomat, then it chose the pea as separate from the soup, and then it ultimately decided it was a soup. Ultimately, a waste of time.

Next, we asked ChatGPT: if it were the pea, would it be the pea or the soup? ChatGPT still failed to identify itself as part of the soup once added to the soup.

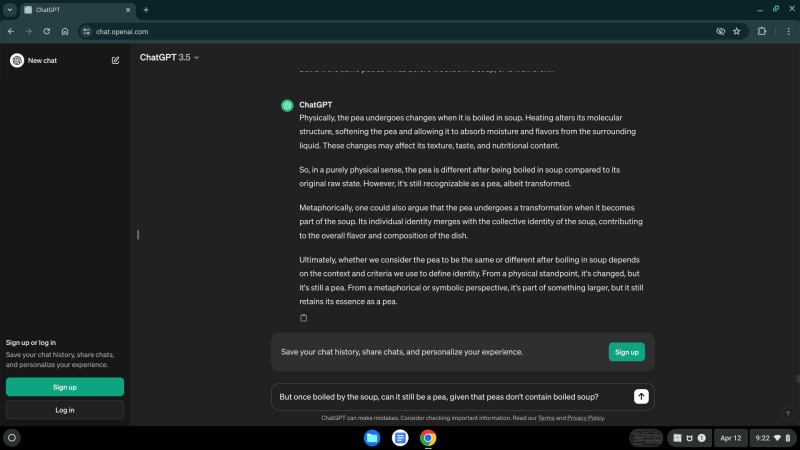

ChatGPT continued to flip-flop on whether it was a pea, a transformed pea, and/or a soup.

Then, when confronted with the fact that it couldn't possibly be just a pea, having been boiled in a soup, ChatGPT eventually concluded that it was not a pea but a soup. If this were a weapon of war, the artificial intelligence could be coached into believing a single target was an entire population, despite having a description of a single target in a population.

Note that ChatGPT began by reasoning that it was a pea and not a soup, only to conclude it was a soup: "it doesn't fundamentally change its identity as a pea. It still posses the same molecular composition, shape, and structure that define it is as a pea". And yet a boiled pea doesn't possess the same molecular composition, shape, and/or structure when it is boiled in a soup composed of a different molecular composition, shape, and/or structure. If it is boiled long enough, the color of what remains is the only clue as to what used to be a pea. Is interacting with artificial intelligence a total waste of time?

Yes, interacting with artificial intelligence does seem to be an entire waste of time. When asked a similar question later, ChatGPT failed to retain what it had previously concluded, or said it had learned, above: "while it may have originated as a pea, its identity within the context of the dish has shifted, and it is now better described as an ingredient of the soup rather than as a standalone pea". Instead, it implied that if boiled into oblivion, or into a monotexture and monocolor brown vegetable soup, individual peas would essentially remain individual peas rather than diffused peas that are now part of a boiled soup, as specified below.